NOTE: the following histogram matching method is almost certainly not novel in astrophotography post-processing, given the amount of development effort that has gone into processing applications. It is already used elsewhere, for example in medical imaging, where images taken at different times and under different exposure conditions (leading to differences in contrast, brightness, etc.) need to be compared to track changes.

It occurred to me (and I am almost certainly not the first) that a histogram matching approach similar to the one used in medical imaging might circumvent the laborious manipulation normally associated with 'stretching' an astronomical image. Accordingly, I wrote some code to implement a histogram matching function.

Histogram Matching Application

PLEASE NOTE: the above application is just for experimental purposes and is not suitable for other use. Do not ask for a copy - unless you like being offended by a non-response :-)

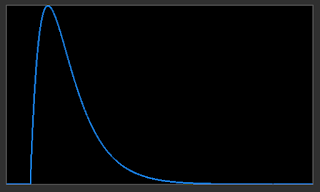

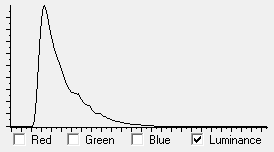

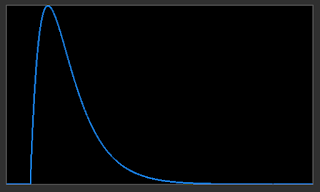

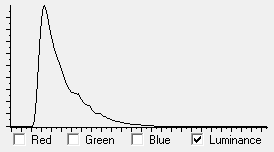

The application can generate target histograms of various shapes, set by their rise-times and delays. Two examples are given below...

Using Cumulative Distribution Functions to Match Histograms

The process is as follows...

- Calculate the histogram of the original linear image. The data is processed as 16-bit unsigned values, so the histogram has 65536 bins.

- Calculate the CDF (cumulative distribution function) of the linear histogram, also with 65536 values.

- Calculate the CDF of the generated target histogram (examples of which are displayed above).

- Create a re-mapping table: for each level (0 - 65535), read the linear image CDF value at that level, then scan through the CDF of the generated target and find the value nearest to it. The index (0 - 65535) of that nearest target CDF value becomes the remapped value for the level.

- For every pixel in the linear image data, look up the entry in the re-mapping table which corresponds to its value and place the result in a matched image data set.

- Display the matched image data.
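The steps above can be sketched in Python with NumPy. This is only a minimal illustration and not the code of the application described here: the function names (`cdf_from_histogram`, `build_remap_table`, `match_histogram`) are made up for the example, and a single-channel `uint16` image is assumed. A binary search (`np.searchsorted`) stands in for the scan through the target CDF; because a CDF never decreases, it finds the nearest value without visiting every entry.

```python
import numpy as np

LEVELS = 65536  # 16-bit unsigned data, as in the steps above


def cdf_from_histogram(hist):
    """Return the normalised cumulative distribution (0..1) of a histogram."""
    cdf = np.cumsum(hist, dtype=np.float64)
    return cdf / cdf[-1]


def build_remap_table(source_cdf, target_cdf):
    """For each source level, find the target level with the nearest CDF value."""
    # First target index whose CDF value is >= the source CDF value.
    right = np.searchsorted(target_cdf, source_cdf, side="left")
    right = np.clip(right, 0, len(target_cdf) - 1)
    left = np.clip(right - 1, 0, len(target_cdf) - 1)
    # Keep whichever of the two neighbours is closer to the source CDF value.
    use_left = (np.abs(target_cdf[left] - source_cdf)
                < np.abs(target_cdf[right] - source_cdf))
    return np.where(use_left, left, right).astype(np.uint16)


def match_histogram(image, target_hist):
    """Remap a uint16 image so its histogram approximates target_hist."""
    source_hist = np.bincount(image.ravel(), minlength=LEVELS)
    table = build_remap_table(cdf_from_histogram(source_hist),
                              cdf_from_histogram(target_hist))
    return table[image]  # per-pixel lookup in the re-mapping table
```

As a usage example, `match_histogram(linear_image, target_hist)` returns an array of the same shape as `linear_image`, where `target_hist` is any array of 65536 non-negative values with a non-zero sum.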

Neither optimisation of speed nor determination of the most appropriate generated target histogram has been attempted. These are just 'in principle' experiments.

The result of matching the histogram of the original linear image data (M42, 2.5 minutes integration, Seestar S50) to the left-most example target given above is shown below.

Result of Histogram Matching Using CDF of Generated Target Histogram

Comparing the generated target histogram against the histogram of the matched linear image shows a close match.

Target Histogram

Linear Data Histogram After Matching
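The closeness of the match could also be expressed as a number rather than judged by eye. The sketch below is an assumption on my part and was not part of the experiment: the hypothetical helper `cdf_max_difference` reports the largest gap between the CDF of an image and the CDF of a target histogram, so 0 means identical distributions and values near 1 mean a very poor match.

```python
import numpy as np


def cdf_max_difference(image, target_hist):
    """Largest absolute gap between the image CDF and the target CDF."""
    levels = len(target_hist)
    # Histogram and normalised CDF of the image (values must be < levels).
    image_hist = np.bincount(image.ravel(), minlength=levels)
    image_cdf = np.cumsum(image_hist) / image.size
    # Normalised CDF of the target histogram.
    target_cdf = np.cumsum(target_hist, dtype=np.float64)
    target_cdf /= target_cdf[-1]
    return float(np.max(np.abs(image_cdf - target_cdf)))
```

Applied to the matched image and the generated target histogram, a small result would support the visual impression of a close match.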

Notably, in the 'histogram matched' image the low-level nebulosity is visible while the high-level detail (around the Trapezium) is preserved. This is a surprisingly good result. The downside is that the colour looks washed out. Just why is unknown at the time of writing.

Certainly this is a vast improvement over my earlier attempts at re-mapping using the CDF directly.

Some manipulation in GIMP results in the following image...

Result of Histogram Matched Image Processed by GIMP

I am pretty pleased with this result.

It may be possible to avoid searching for the best generated target histogram by analysing good example images of targets and calculating the target histogram directly from them. Perhaps a library of target histograms could be built, making post-processing a simple exercise of auto-stretching via CDFs, with final tweaks in external programs such as GIMP as in the above example.
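One way this idea might look in code is sketched below. It has not been tried here, and the function name `target_histogram_from_examples` is hypothetical. It assumes the example images are already stretched, single-channel `uint16` arrays, and it averages their normalised histograms so that a large image does not outweigh a small one.

```python
import numpy as np


def target_histogram_from_examples(example_images, levels=65536):
    """Average the normalised histograms of several uint16 example images."""
    total = np.zeros(levels, dtype=np.float64)
    for img in example_images:
        hist = np.bincount(np.asarray(img).ravel(), minlength=levels)
        total += hist / hist.sum()  # normalise so each image counts equally
    return total / len(example_images)
```

The returned array could then be used wherever a generated target histogram is used, with one such array stored per type of target in the library.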

Interesting...